The EU AI Act and What It Means for Schools

An Overview of the Legislation and Its Implications for Different School Departments

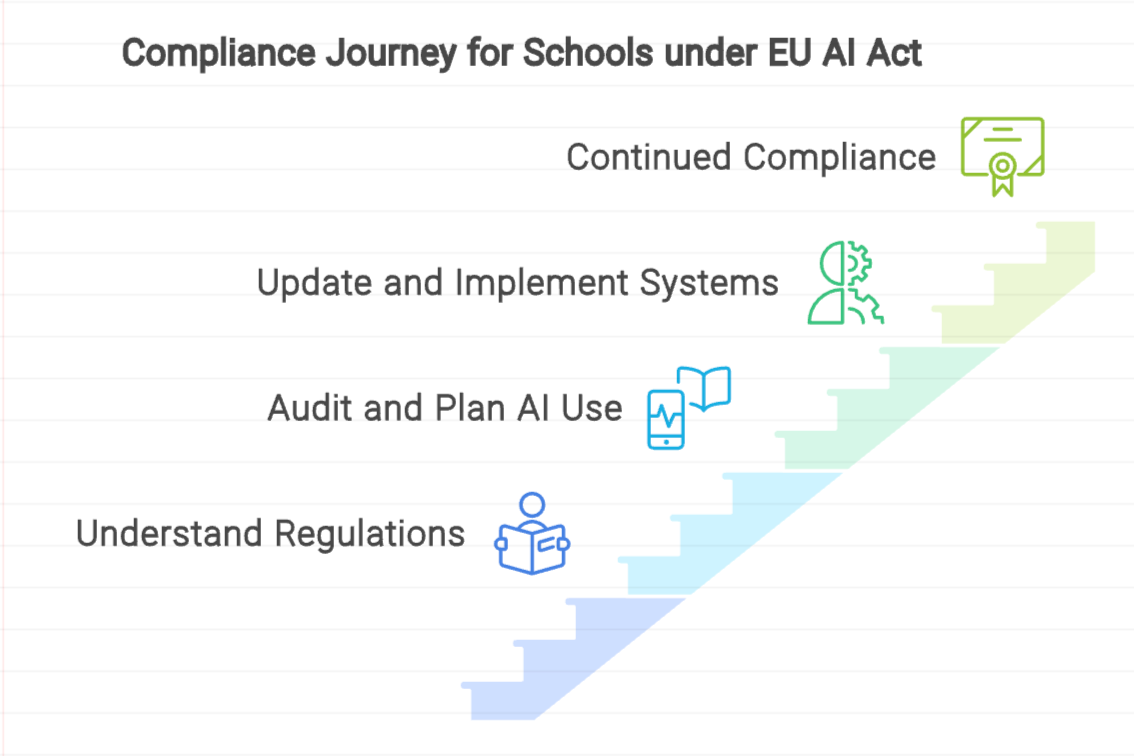

The EU AI Act has far-reaching implications for schools across Europe and will help them ensure that their use of AI systems is both effective and responsible. This post gives school leaders an overview of the legislation and explores its practical implications for educational institutions.

Note that schools outside the EU can choose to comply voluntarily with the requirements of the AI Act, in addition to their local regulations. For them, the following insights and actionable steps will help ride the AI wave safely and leverage its momentum, all while managing its ripple effects on a school’s administration and teaching and learning, as well as on its HR, Finance, IT, and Communications departments.

Providers and Deployers

Schools generally fall under the category of “deployers” of AI systems.

However, they may have to follow the stricter and more comprehensive regulations applicable to providers if they develop AI systems themselves or in partnership with providers.

AI Literacy

The EU AI Act requires schools to take measures to ensure a sufficient level of AI literacy among their staff.

“Deployers of AI systems shall take measures to ensure, to their best extent, a sufficient level of AI literacy of their staff and other persons dealing with the operation and use of AI systems on their behalf, taking into account their technical knowledge, experience, education and training and the context the AI systems are to be used in, and considering the persons or groups of persons on whom the AI systems are to be used” [Article 4].

AI Risk Levels

The EU AI Act categorizes AI systems into four levels of risk, each with its own obligations.

Unacceptable Risk

AI systems presenting unacceptable risks are prohibited. This includes:

Manipulative or deceptive techniques used to distort decisions and behavior.

Biometric categorization systems inferring sensitive attributes.

Compiling facial recognition databases through untargeted scraping of facial images.

Inferring emotions in workplaces or educational institutions, except for medical or safety reasons.

High Risk

AI systems presenting high risks are regulated.

This includes individual profiling, i.e. automated processing of personal data to assess work performance, interests, or behavior, such as:

AI systems determining admission to educational institutions.

Setting learning outcomes, steering the learning process and assessing achievement.

Monitoring and detecting prohibited student behaviour during tests.

AI systems used for admissions, recruitment, selection, promotion, termination of contracts, task allocation, performance evaluation.

Note that data protection requirements are covered by other regulations, such as GDPR. However, AI systems create new opportunities and risks related to personal data processing.

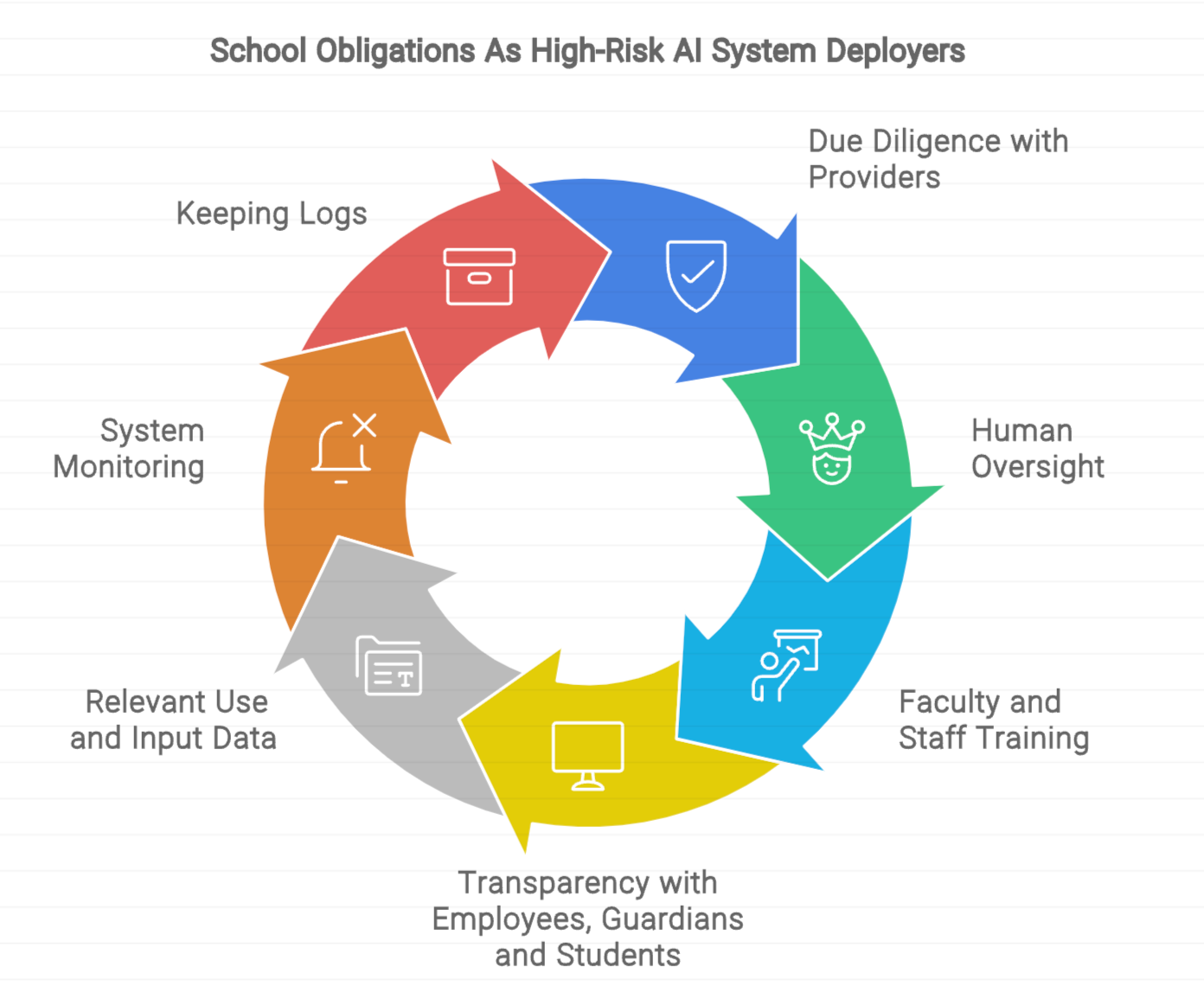

As deployers of high-risk AI systems, schools must:

Take appropriate technical and organizational measures (due diligence, audit, training) to ensure they use high-risk AI systems in accordance with the providers' instructions for use.

Assign human oversight to personnel with the necessary competence, training and authority, as well as the necessary support.

Ensure that input data is relevant and sufficiently representative in view of the intended purpose of the high-risk AI system.

Monitor the operation of the high-risk AI system and, where relevant, inform providers and relevant authorities when they identify a serious incident or have reasons to consider it may present a risk.

Keep the logs automatically generated by that high-risk AI system to the extent such logs are under their control, for a period appropriate to the intended purpose of the high-risk AI system, of at least six months.

Inform the affected persons that they will be subject to the use of high-risk AI systems.
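The log-retention duty listed above lends itself to a simple automated check. The following is a minimal sketch, not an official compliance tool: the function name `may_delete` and the constant `MIN_RETENTION_DAYS` are hypothetical, and "six months" is approximated conservatively as 184 days, which is an assumption rather than a figure taken from the Act.

```python
from datetime import date, timedelta

# Assumption: "at least six months" is approximated as 184 days,
# the longest possible span of six consecutive calendar months.
MIN_RETENTION_DAYS = 184


def may_delete(log_date: date, today: date,
               retention_days: int = MIN_RETENTION_DAYS) -> bool:
    """Return True only if a log entry is older than the retention period.

    A school may choose a longer retention period, but this sketch
    refuses any period shorter than the six-month minimum.
    """
    if retention_days < MIN_RETENTION_DAYS:
        raise ValueError("retention period is shorter than the six-month minimum")
    return today - log_date > timedelta(days=retention_days)


if __name__ == "__main__":
    # A two-month-old log must still be kept.
    print(may_delete(date(2025, 1, 1), date(2025, 3, 1)))
    # A year-old log is past the minimum retention period.
    print(may_delete(date(2024, 1, 1), date(2025, 1, 1)))
```

In practice, the appropriate period depends on the intended purpose of the system and on other applicable law, so the default here should be treated as a floor rather than a recommendation.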

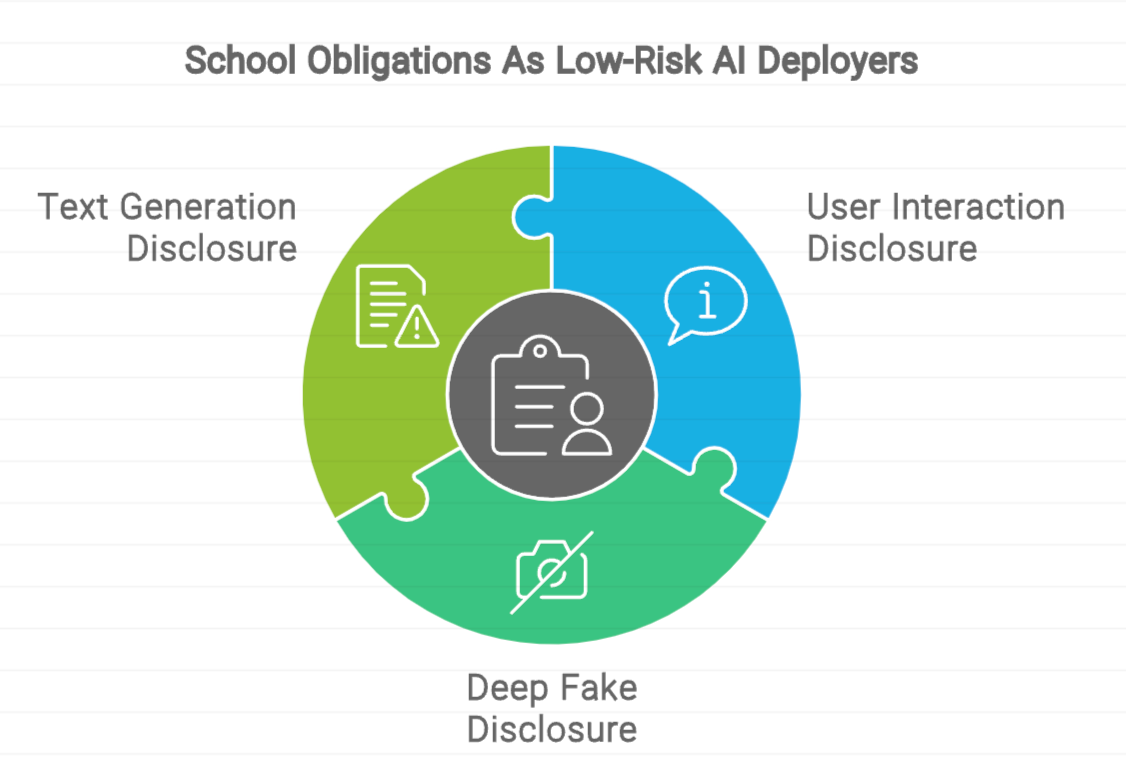

Limited Risk

AI systems presenting limited risks are subject to transparency obligations.

Schools must inform users when they are interacting with an AI system, unless it is obvious.

AI-generated or manipulated image, audio or video content must be disclosed as such.

AI-generated or manipulated text must be disclosed as such, unless it has been subject to human review.
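The disclosure rules above can be expressed as a small decision function, for instance in a school's content publishing workflow. This is only a sketch of the rules as this post summarizes them: the function name `needs_disclosure` and the content-type labels are hypothetical, and the Act itself contains further conditions that are not modeled here.

```python
def needs_disclosure(content_type: str, ai_generated: bool,
                     human_reviewed: bool = False) -> bool:
    """Decide whether content should carry an AI disclosure label.

    content_type is a hypothetical label: "text", "image", "audio" or "video".
    """
    if not ai_generated:
        return False
    if content_type == "text":
        # Per the summary above, text is exempt once a human has reviewed it.
        return not human_reviewed
    # Image, audio and video content is disclosed regardless of review.
    return True


if __name__ == "__main__":
    print(needs_disclosure("image", ai_generated=True, human_reviewed=True))
    print(needs_disclosure("text", ai_generated=True, human_reviewed=True))
```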

Minimal Risk

AI systems presenting minimal risks are unregulated.

This applies when AI systems only perform narrow procedural or assistive functions that do not substantially alter the input data, are not preparatory tasks to high-risk uses, or are subject to human review.
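A school could start its compliance work with an inventory that maps each AI use case to one of the four risk levels described above. The sketch below is illustrative only: the use-case labels and the `triage` function are hypothetical, the tier assignments follow the examples given in this post (the spell checker is an assumed example of a narrow assistive function), and any real classification should be confirmed with legal counsel.

```python
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "regulated"
    LIMITED = "transparency obligations"
    MINIMAL = "unregulated"


# Hypothetical use-case labels, each mapped to the tier this post suggests.
USE_CASE_RISK = {
    "emotion_inference_in_class": RiskLevel.UNACCEPTABLE,
    "admissions_screening": RiskLevel.HIGH,
    "exam_proctoring": RiskLevel.HIGH,
    "staff_performance_evaluation": RiskLevel.HIGH,
    "parent_inquiry_chatbot": RiskLevel.LIMITED,
    "ai_generated_newsletter_image": RiskLevel.LIMITED,
    "spell_checker": RiskLevel.MINIMAL,  # assumed narrow assistive function
}


def triage(use_case: str) -> str:
    """Summarize the risk tier of a use case, or flag it for manual review."""
    level = USE_CASE_RISK.get(use_case)
    if level is None:
        return f"{use_case}: unclassified, needs manual review"
    return f"{use_case}: {level.name} risk ({level.value})"


if __name__ == "__main__":
    for name in ("exam_proctoring", "parent_inquiry_chatbot", "timetable_optimizer"):
        print(triage(name))
```

Defaulting unknown use cases to manual review, rather than to minimal risk, keeps the inventory from silently under-classifying a new tool.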

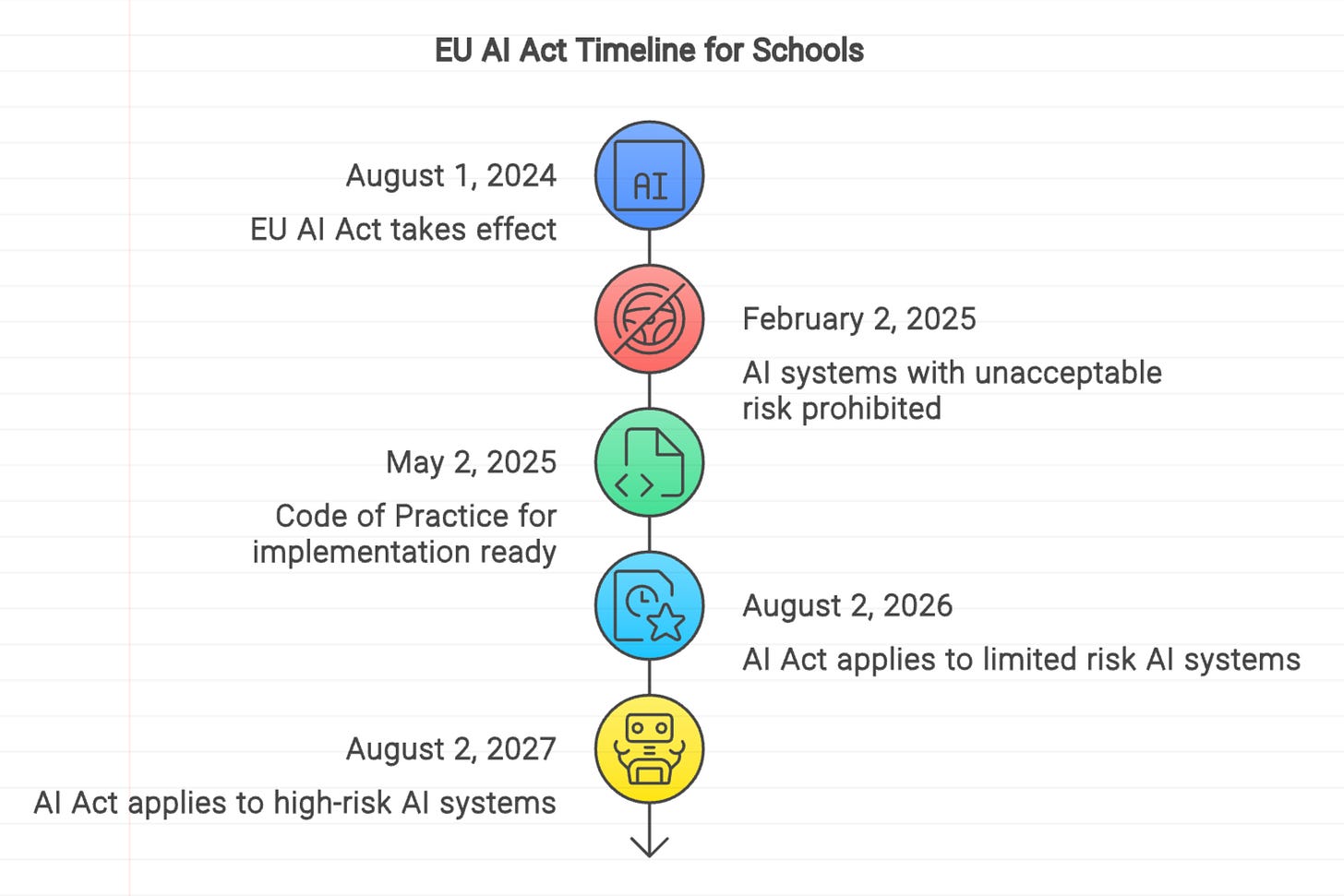

Timeline

Other Regulations

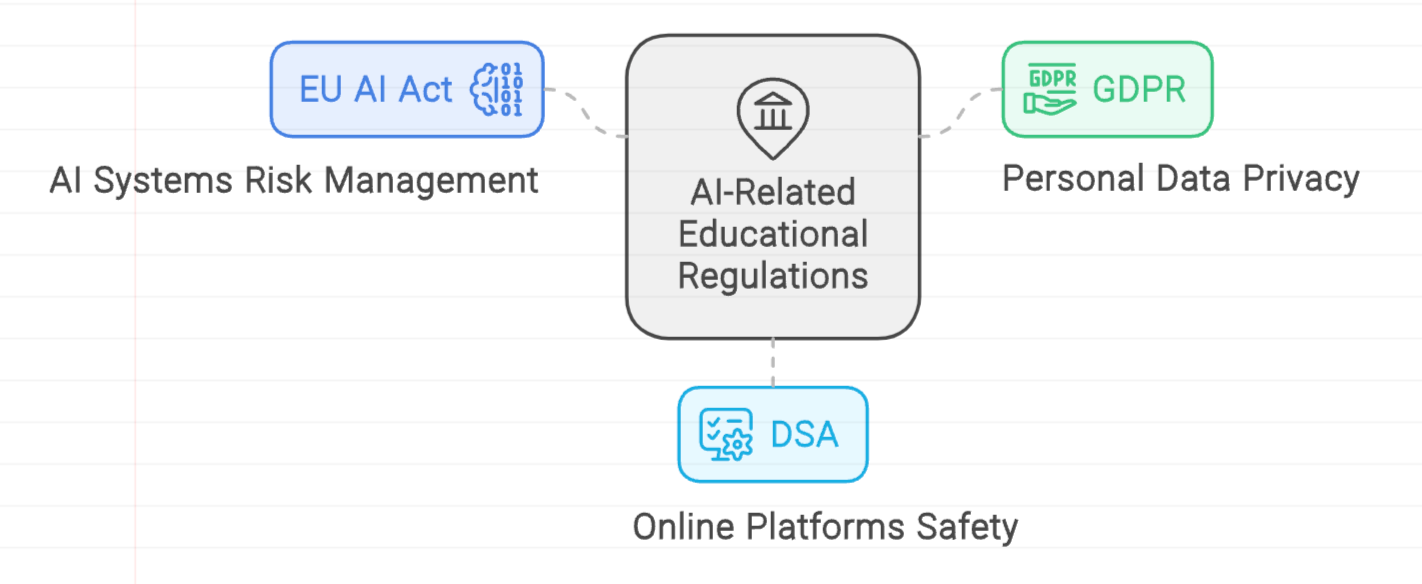

In addition to the EU AI Act, European schools must also comply with the GDPR and the Digital Services Act (DSA), especially when using AI systems that handle student data or online platforms for educational purposes.

Practical Implications for Different School Departments

Administration and Human Resources

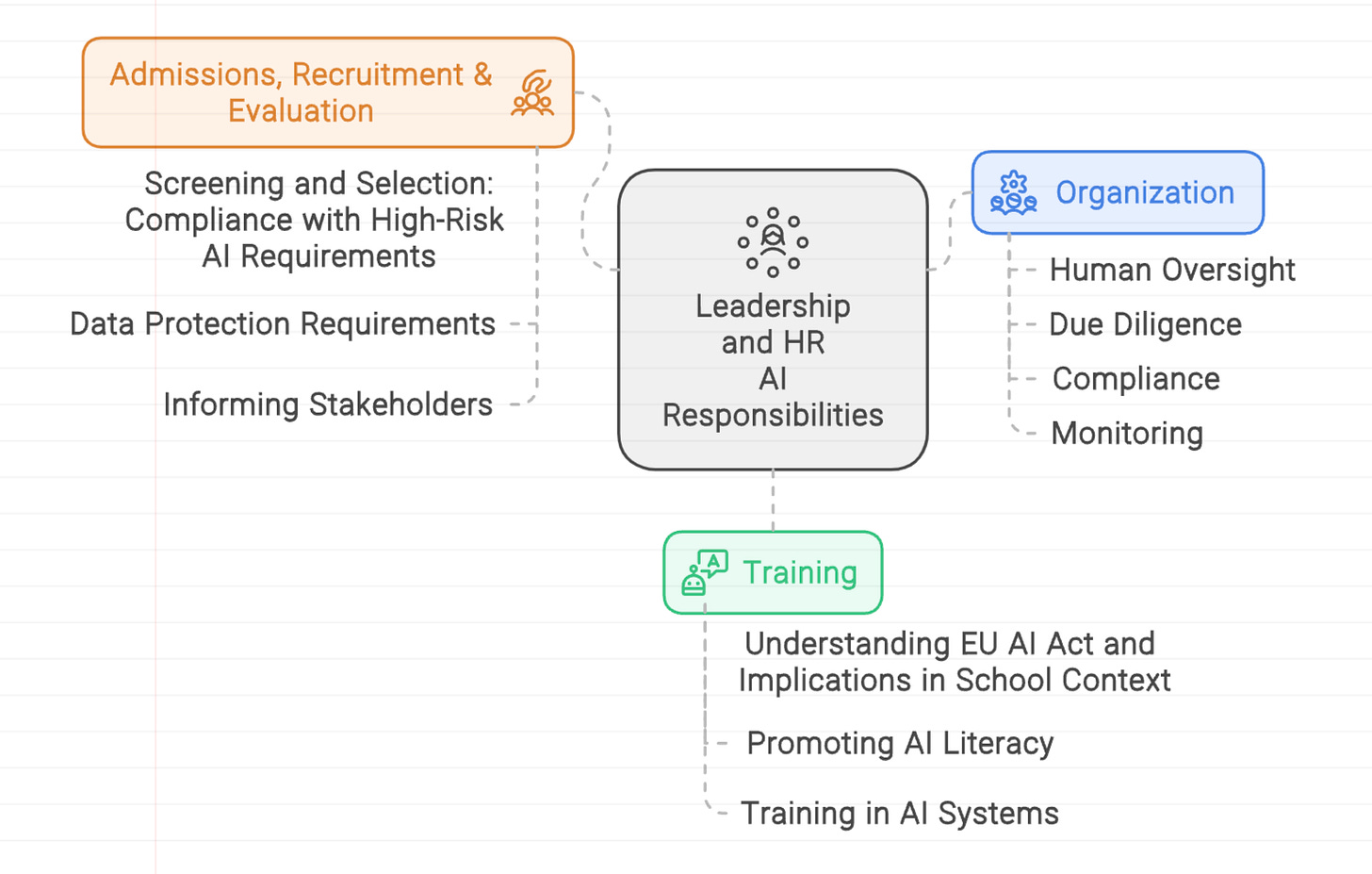

Organization:

Schools must assign human oversight and ensure due diligence, compliance, and monitoring.

Training:

Schools must ensure relevant staff understand the EU AI Act and its implications in a school context.

Schools must promote AI literacy and ensure relevant staff are trained in the appropriate use of AI-powered systems.

Admissions, Recruitment & Evaluation:

Schools must ensure any AI system used to screen, select, interview, promote, or evaluate people complies with the obligations for high-risk AI (human oversight, data quality, transparency, etc.) and with data protection principles (lawfulness, fairness, transparency, purpose limitation, minimization, accuracy, storage limitation, security).

Schools must inform families, candidates, and employees about the use of AI in admissions, recruitment, and evaluation processes.

Communication

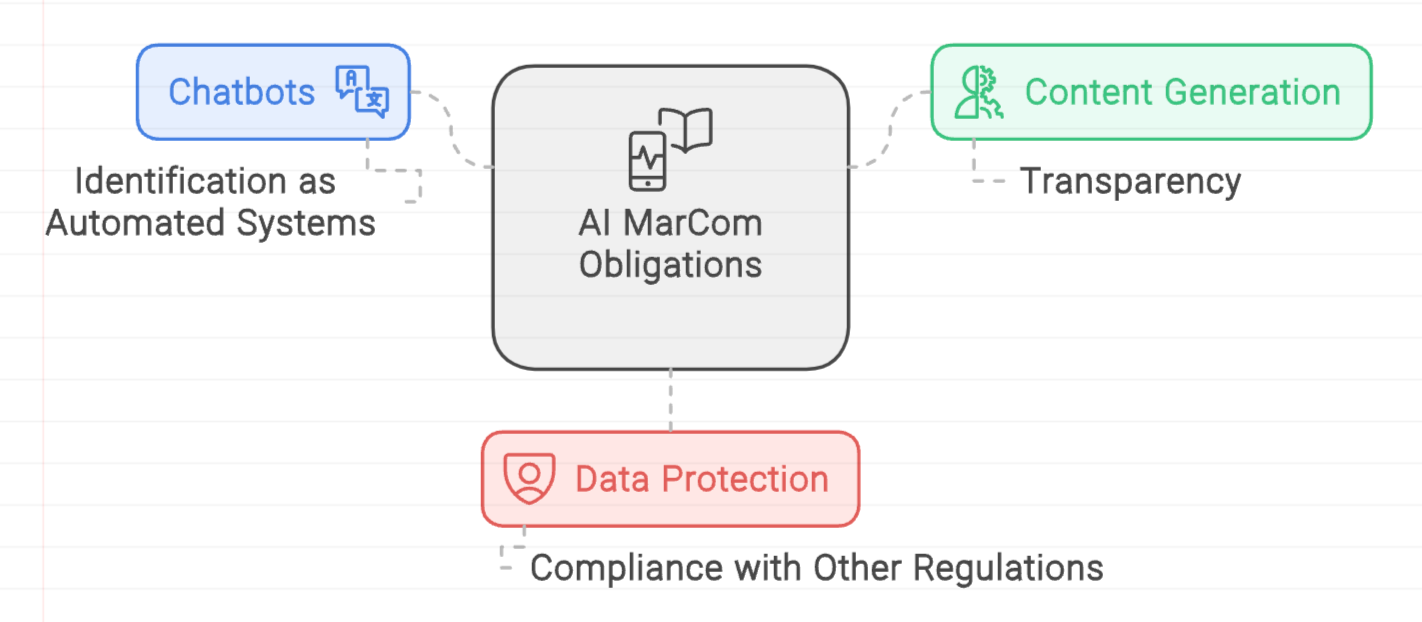

Chatbots: If using AI-powered chatbots for student or parent inquiries, schools need to ensure transparency by clearly identifying them as automated systems.

Content Generation: Schools must follow transparency obligations when using AI to generate content (text and illustrations on website or social media…).

Data Protection: In both cases, schools must protect personal data processed with AI systems, in line with relevant regulations, such as GDPR.

Finance

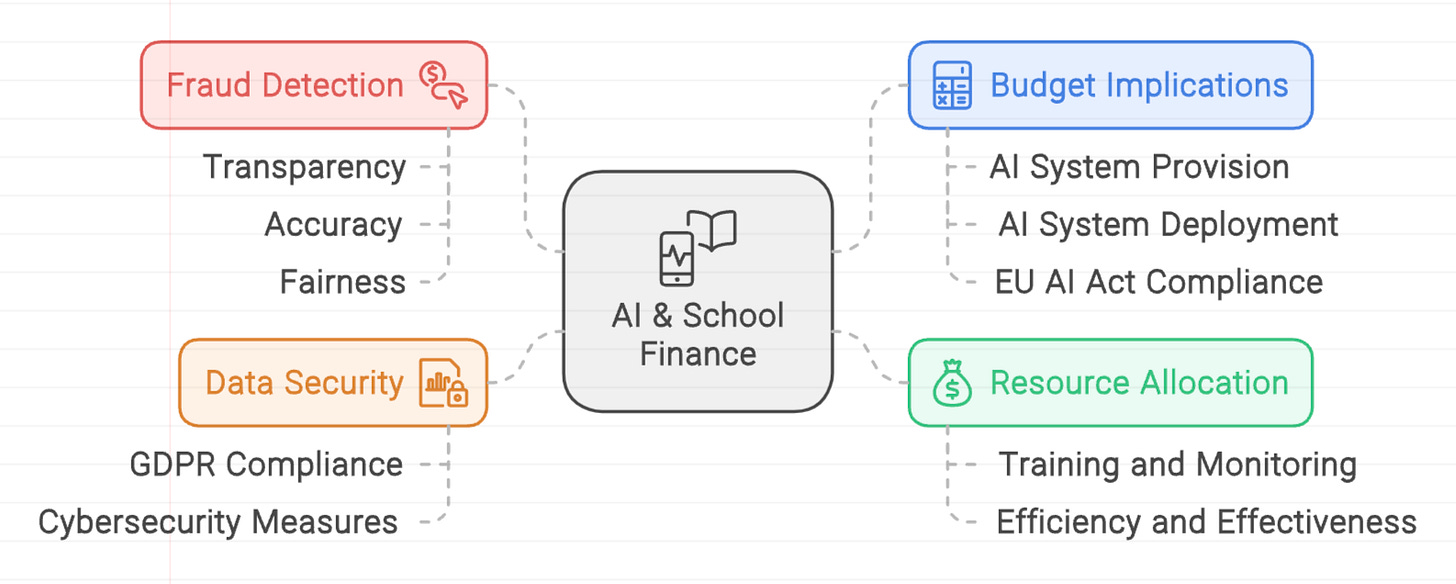

Resource Allocation: While AI systems can help make financial planning and resource allocation both more efficient and effective, their use requires appropriate training and monitoring.

Fraud Detection: While AI systems can help detect financial irregularities, schools must ensure that these systems are transparent, accurate, and fair.

Budgetary Implications: Finance has a critical role to play in predicting and delineating the budgetary implications of both AI system provision/deployment and EU AI Act compliance.

Data Security: Financial data is sensitive. Schools must comply with GDPR and implement robust cybersecurity measures to protect this data when using AI systems.

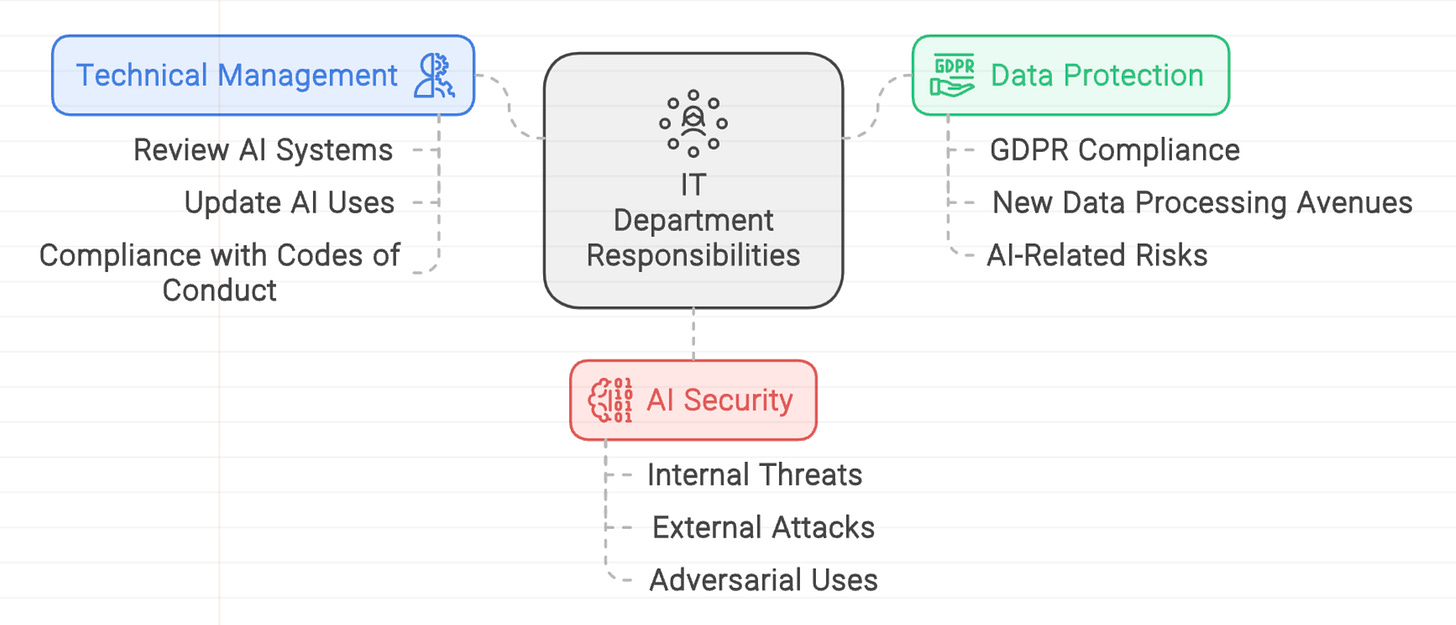

IT

Technical Management: The IT department will likely be tasked with reviewing and updating the AI systems and uses currently in place at school, as well as ensuring their compliance with legal requirements and codes of conduct.

Data Protection: It will also likely be responsible for continued compliance with GDPR, given the new data processing avenues and risks related to AI systems.

AI Security: Finally, it will be in charge of the security of AI systems, including protection against internal and external adversarial uses and attacks.

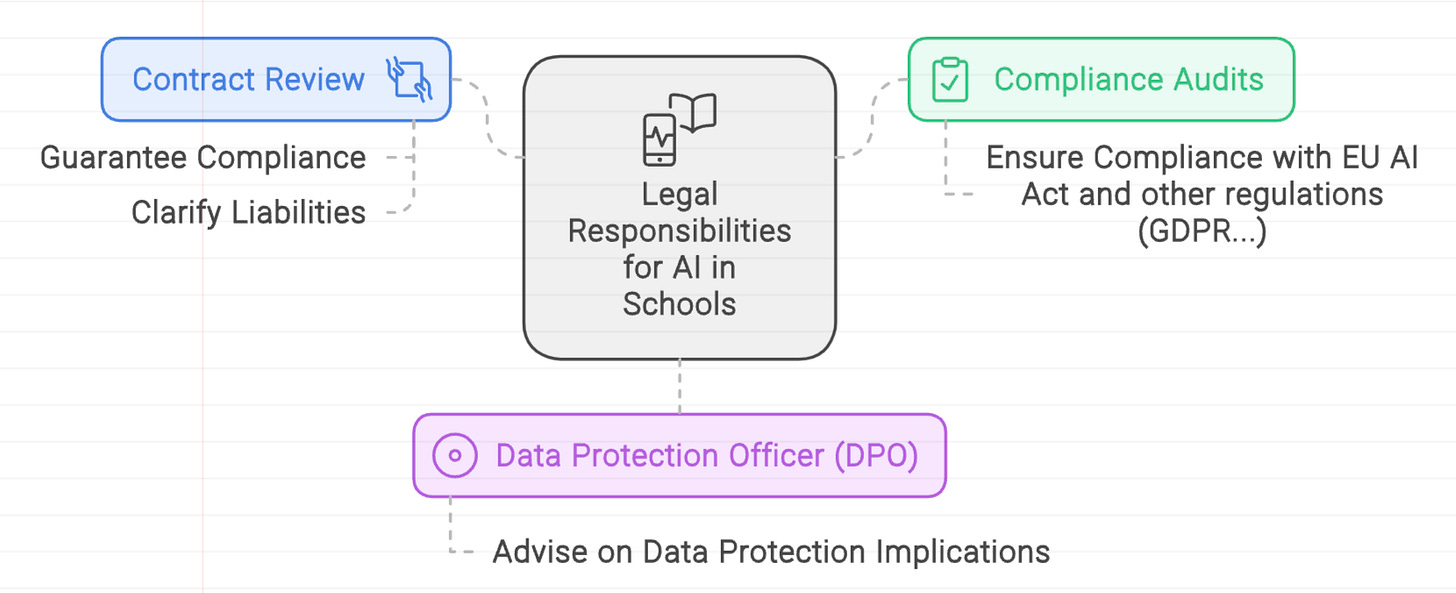

Legal

EU AI Act, Timeline and Penalties: Legal must familiarize itself with the new EU AI Act, its requirements, timeline, and penalties, and seek expert advice and share it internally as appropriate.

Contract Review: Legal must review contracts with AI providers to guarantee compliance with data protection requirements and clarify liabilities related to AI use.

Compliance Audits: The legal department needs to conduct initial and regular audits to ensure the school's own compliance with the EU AI Act (and other relevant regulations, such as GDPR).

Data Protection Officer (DPO): While data protection is covered by other regulations, such as GDPR, AI systems present new data processing avenues and risks, which affect all school departments (administration, HR, finance, teaching and learning, IT…).

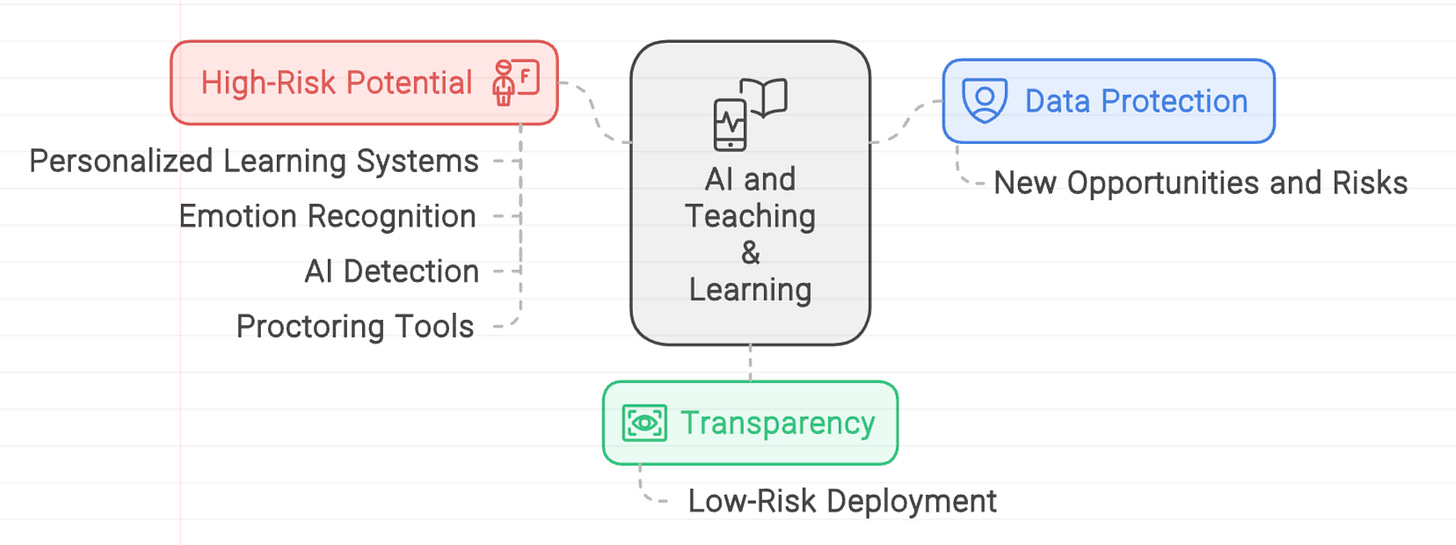

Teaching and Learning

High-Risk Potential: AI-powered personalized learning systems need to follow all obligations attached to high-risk deployment if they significantly influence educational pathways or assess student performance. This also applies to AI detection and AI-based proctoring tools, as well as to emotion recognition in the cases where it is permitted, i.e. for medical or safety reasons.

Data Protection: While data protection is covered by other regulations such as GDPR, AI systems create new opportunities and risks related to personal data processing, especially in the context of personalization and assessment.

Transparency: Even low-risk deployment requires transparency.

Conclusion

The EU AI Act marks a turning point in the use of AI systems, particularly in a school context. While AI technologies offer exciting opportunities to enhance virtually all school operations, their power and novelty also come with considerable risks, and thus call for high levels of scrutiny and responsibility.

Compliance with the EU AI Act, either mandated or voluntary, will help schools follow best practices throughout the organization and ensure they are positioned to harness this new energy safely and effectively.

Disclaimer

The content of this blog post, including practical recommendations, is provided for information purposes only. Schools should seek legal advice from licensed experts to ensure compliance with official regulations.

Reference

Learn more about the EU AI Act from the European Commission here.