Pedagogical AI-Integration: a Step-by-Step Process

How can we leverage the full potential, and avoid the considerable risks, of AI-integration in the classroom?

The goal of this article is to provide a model (and an app) that helps educators leverage the potential of AI integration in the classroom while avoiding its risks.

While a previous article focused on assessment, we will now look more specifically at instruction.

This model does not only list do’s and don’ts derived from current research (see this recent review by Philippa Hardman) and my own professional experience: it also integrates them in an easily applicable step-by-step process.

As one would expect, the general idea is quite simple: AI integration should always help advance, and never undermine, best teaching and learning practices.

Why integrate AI - The Potential

There are at least three different reasons why teachers might want to integrate AI into their professional practice.

First, for efficiency gains. While legitimate in its own right, this reason won’t be covered here, as we are focusing on “student-side” AI integration.

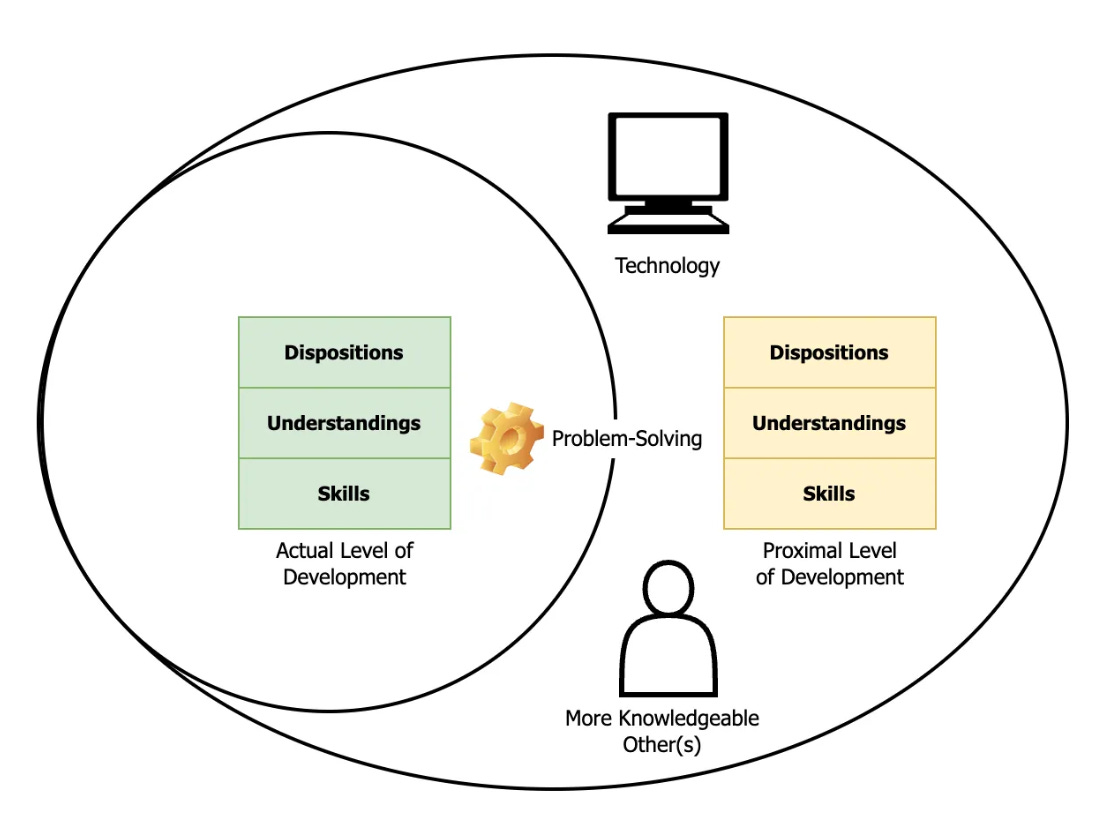

Second, for pedagogical gains. As I explained elsewhere, the paradox of education is that we learn by doing what we are learning to do - and thus can’t really do yet. Good pedagogical practices help solve this paradox and, as Vygotsky explained, tech-integration can be part of the solution.

In his view, education involves helping students progress from their ‘actual’ to their ‘proximal level of development’ by providing the support they need to learn to solve, with the assistance of technology and the guidance of a more-knowledgeable-other, problems they cannot yet tackle on their own.

Third, for future-readiness purposes. Indeed, AI integration can not only serve pedagogical purposes: it is also a crucial learning outcome in and of itself, given the growing need for students to master these new technologies and develop the literacy needed to use them effectively and responsibly.

Why not integrate AI - The Risks

Given its potential benefits (and growing pervasiveness), failing to integrate AI would create an opportunity gap for our students.

However, unless it is properly planned, AI integration can undermine the learning process, and create more problems than solutions.

Based on current research and first-hand experience, it seems like these risks can be classified into three categories. Indeed, AI “dis-integration” can:

Decrease student engagement:

To put it simply, Self-Determination Theory explains that motivation is driven by 3 factors: Autonomy, Relatedness, and Competence. The challenge is thus to provide the right kind and level of support (relatedness) to allow students to accomplish (competence) by themselves (autonomy) new tasks they are not capable of yet.

And the risk is that improper AI integration ensures pseudo-success at the cost of both autonomy (by completing the task for the student, taking away their agency) and relatedness (by cutting them off from teacher and peer support).

Impair the acquisition of foundational knowledge and skills:

Improper AI integration might ensure “success”, but impair competence by preventing the development of the new understandings and skills required at the “proximal level”. For instance, it might help students outline the limitations of GDP, and even feel like they understand them, without truly grasping the concept.

This is always a risk when “scaffolding” student learning, as the only way to help them perform tasks they are not capable of yet is to support their cognitive lift. When done well, this helps optimize and focus the cognitive load. But the risk, with AI technologies, is to offload and automate the entire mental operation.

Prevent the development of higher-order understanding and skills:

By providing simple solutions to complex problems, improper AI integration risks crowding out mental effort and discouraging actual problem-solving, as well as creative and critical thinking.

Even more so, it might remove the need for meta-cognition, that is, for students to think about their own thinking when approaching novel situations: setting goals, reflecting on what they do not know yet, devising plans to bridge this gap, etc.

How to Integrate AI - The Solutions

Having a clear idea of both the potential and the risks associated with AI integration, we can now lay out the steps to take (and the ones to avoid) to leverage this technology to the fullest extent, all while avoiding its pitfalls.

Step 1. Define the Learning Objectives

AI integration is successful if it helps students achieve learning objectives. Thus, any successful AI integration plan must begin by clarifying what it is exactly that we are aiming for students to be able to understand and/or do at the end of a lesson or unit.

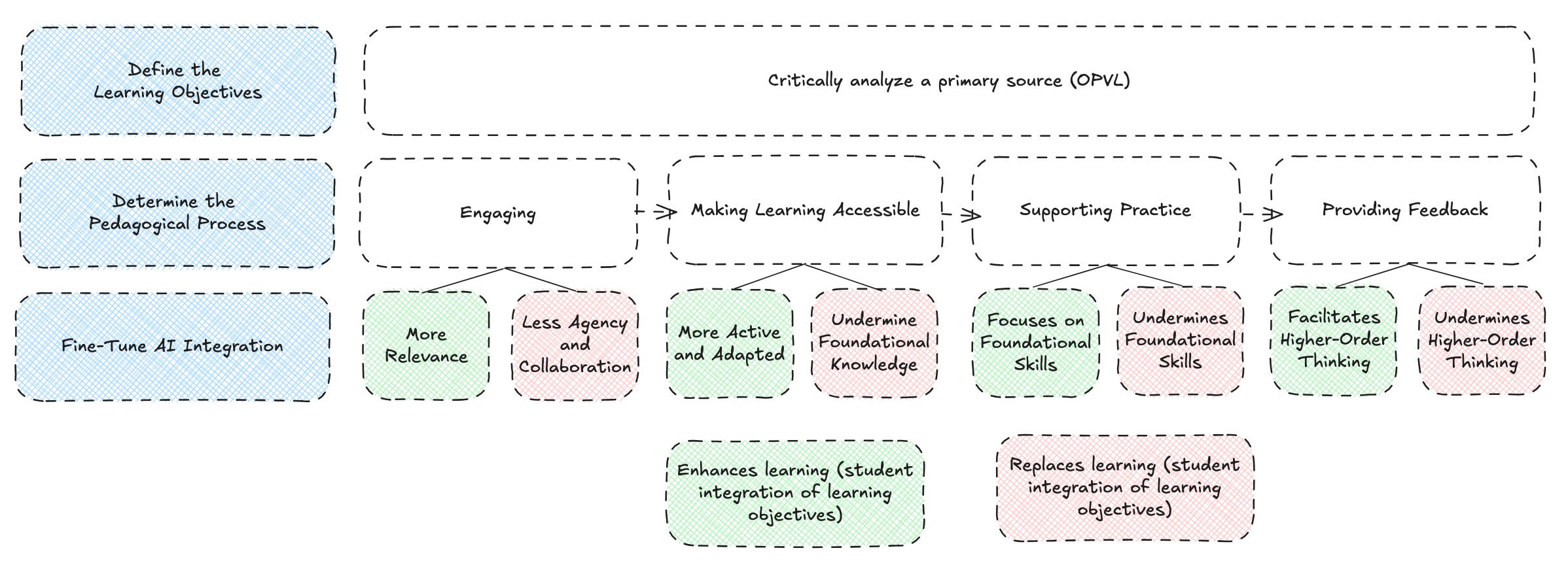

For our worked example, let’s say that our goal is for them to “be able to critically analyze a primary source”.

Step 2. Determine the Pedagogical Process

To be successful, AI integration needs to be synonymous with the implementation of effective pedagogy. As revolutionary as they are, these technologies do not change the art and science of teaching and learning. AI simply enhances (or undermines) our ability to follow sound practices in our classrooms — depending on how we use it.

This will look different in different schools, which might follow Rosenshine, Gagné, Marzano, or any number of philosophical approaches.

For our worked example, let’s say that our theory is that learning requires 4 steps:

Engaging and directing students’ attention

Making new ideas accessible

Supporting practice

Providing feedback on process and performance

As can be seen in the diagram below, AI integration can either enhance, or undermine, each of these steps.

Step 3. Fine-Tune AI Integration

Let’s look at them one by one, so we can fine-tune AI integration for educational purposes.

Please keep in mind: this is just a worked example - steps will look different in your context.

Engaging and directing students’ attention

How might AI integration help make these learning objectives more relevant to students? Connecting with their background, interests, and real-life situations is often effective, as is creating simulations or challenging students with projects and performance tasks.

Importantly, AI integration should not diminish students’ interest in the learning experience (e.g., by replacing effective and beloved methods). If a “jigsaw” has been successful in the past, one might consider ways to enhance (but not replace) it with AI.

Making new ideas accessible

How might AI integration help make the new learning more accessible, whether it is by clarifying prerequisites, breaking down steps, or providing additional supports?

Might this step be integrated with the next one, so that learning is active (and potentially collaborative, as well as adaptive)?

Supporting practice

How might AI integration make learning more active? How can we ensure it does not take away student agency or collaboration? How might it make learning more adaptive? How can we ensure it does not undermine the development of foundational understandings and skills?

To answer these questions, proper AI integration requires that we break down this third step, just as we did for the entire pedagogical process. How do students become able to critically analyze a source? Let’s say that they need to:

Understand the elements of the OPVL method

Apply the model to a source

Simply hearing a teacher explain the elements of OPVL (the origin, purpose, value, and limitations of a source) will likely not be as effective as using them to analyze a personally interesting document for an exciting project. However, it is not possible to apply this framework autonomously without first understanding it. AI can help solve this problem, as we can use it to break down the process and automate fewer and fewer operations as students perform more and more complex ones.

Individually, in pairs, or in small groups, students can:

Instruct an AI to apply OPVL to a source and explain each element of the model in this context. “Reasoning” models, which make their “thinking” explicit, are particularly useful for this.

Generalize from this example a prompt clarifying for themselves what each element of OPVL means, thus engaging in metacognition and making this thinking visible and ready for transfer.

Note that, before moving to the next step, students could test whether this prompt yields better results than basic instructions when used with a smaller model. This would be an example of “teaching AI”: it works in important AI literacy considerations and, by exercising critical thinking (evaluation), helps students deepen their understanding of OPVL (and of AI technologies, including which tools to use for what purpose, when, and how).

Note that it is also possible to use this step to demonstrate the limitations of the technology, reflect on the risks of over-reliance, and derive appropriate use strategies.

Likewise, asking students to try to apply OPVL right after using AI to do so, and before using AI to actually learn how to do so, can help demonstrate the risks of “offloading”.
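To make the idea of a student-written prompt more concrete, here is a minimal sketch of what generalizing OPVL into a reusable prompt could look like. Everything in it is an assumption made for illustration: the function name `build_opvl_prompt`, the guiding questions, and the prompt wording are hypothetical, and students would replace them with their own formulations.

```python
from __future__ import annotations

# Hypothetical guiding questions: placeholders for the students' own wording.
OPVL_ELEMENTS = {
    "Origin": "Who created the source, when, and where?",
    "Purpose": "Why was the source created, and for what audience?",
    "Value": "What can the source tell us, given its origin and purpose?",
    "Limitations": "What can the source not tell us, or where might it mislead?",
}


def build_opvl_prompt(source_title: str,
                      definitions: dict[str, str] = OPVL_ELEMENTS) -> str:
    """Assemble a student-authored OPVL prompt for a given source."""
    lines = [f"Analyze the source '{source_title}' using the OPVL method."]
    for element, guiding_question in definitions.items():
        # One line per OPVL element, in the order the students defined them.
        lines.append(f"- {element}: {guiding_question}")
    lines.append("Explain your reasoning for each element.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_opvl_prompt("Letter from a soldier, 1916"))
```

Keeping the definitions in a separate dictionary is a deliberate choice: it is the part students write and revise themselves, which is where the metacognitive work happens.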

Providing feedback on process and performance

At the previous step, the objective was only for students to understand OPVL - not to be able to apply it themselves. This becomes the objective at the next step, as students follow their own prompt, while AI is now tasked with providing feedback.

Just like the previous step ensured that students learn foundational skills, engage in meta-cognition, and practice higher-order skills such as critical thinking, this new step guarantees that they learn how to achieve the learning objective autonomously.

As needed, adaptive support can be provided here, with the AI providing hints, guiding practice through questions, or addressing necessary clarifications, provided these scaffolds are gradually removed.
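The idea of gradually removing scaffolds can be sketched as a simple rule. This is only an illustration under stated assumptions: the `Support` levels, their ordering from lightest to heaviest, and the "fade one level after each success" rule are all hypothetical choices, not a description of any existing tool.

```python
from enum import IntEnum


class Support(IntEnum):
    """Hypothetical support levels, ordered from lightest to heaviest."""
    NONE = 0       # student works fully on their own
    HINT = 1       # AI offers a short hint
    QUESTIONS = 2  # AI guides practice through questions
    CLARIFY = 3    # AI addresses necessary clarifications directly


def next_support(current: Support, succeeded: bool) -> Support:
    """Fade support by one level after a success; otherwise hold it steady."""
    if succeeded and current > Support.NONE:
        return Support(current - 1)
    return current


if __name__ == "__main__":
    level = Support.CLARIFY
    # Assumed record of whether each successive attempt succeeded.
    for succeeded in (True, False, True, True):
        level = next_support(level, succeeded)
        print(level.name)
```

Note that support never increases in this sketch. Whether to step support back up after repeated difficulty is a pedagogical decision left to the teacher.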

Since neither researching a relevant primary source nor turning its analysis into a clear and organized paragraph is the learning objective for this particular lesson, it is completely fine (at this time) if students rely on the AI for both. All that matters is that they develop these skills later on with a similar process.

However, if they do submit an AI-generated paragraph based on their own analysis (e.g., as a formative assessment), it is important that they reflect on this process, including the role played by AI and what they can learn from it, which helps develop academic honesty.

Further skill development can also be an extension opportunity for fast learners. Those who have already mastered OPVL analysis might “extend” it by learning to research high-quality primary sources.

Another option is to allow these students to explore advanced AI literacy skills. For instance, they might brainstorm what a comprehensive source analysis workflow would look like (and even learn to design it) - e.g., a multi-agent system researching high-quality sources, analyzing them from multiple perspectives, reviewing drafts for bias, etc.

Step 4. Review

Once AI integration in a particular lesson or unit has been properly planned, it is important to review it to double-check:

That no major opportunity to leverage these technologies and enhance student learning has been missed

That none of the planned uses risk:

Undermining engagement, agency, or relatedness

Preventing the acquisition of foundational understanding or skill

Discouraging the development of higher-order and meta-cognitive skills

Impeding student access to successful autonomous performance
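The risk side of this review can be sketched as a simple checklist structure. The sketch below is a hypothetical illustration, not the app mentioned in this article: the names `PlannedUse` and `review` are assumptions, and the risk flags are judgments entered by the teacher rather than anything computed automatically. It does not cover the first check (missed opportunities).

```python
from __future__ import annotations

from dataclasses import dataclass, field

# The four risks listed in the review step.
RISKS = (
    "Undermining engagement, agency, or relatedness",
    "Preventing the acquisition of foundational understanding or skill",
    "Discouraging the development of higher-order and meta-cognitive skills",
    "Impeding student access to successful autonomous performance",
)


@dataclass
class PlannedUse:
    """One planned use of AI in a lesson, with risks flagged by the reviewer."""
    description: str
    flagged_risks: set[str] = field(default_factory=set)


def review(plan: list[PlannedUse]) -> dict[str, list[str]]:
    """Map each risk to the planned uses a reviewer flagged for it."""
    report: dict[str, list[str]] = {risk: [] for risk in RISKS}
    for use in plan:
        for risk in use.flagged_risks:
            if risk not in report:
                raise ValueError(f"Unknown risk: {risk}")
            report[risk].append(use.description)
    return report


if __name__ == "__main__":
    plan = [
        PlannedUse("AI writes the OPVL analysis for the student",
                   {RISKS[1], RISKS[3]}),
        PlannedUse("AI gives feedback on the student's own analysis"),
    ]
    for risk, uses in review(plan).items():
        print(f"{risk}: {uses or 'no planned use flagged'}")
```

A plan passes this part of the review when every risk maps to an empty list.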

There’s an App for That

While there is a broad consensus that AI integration presents both great opportunities and great risks in an educational context, concrete, actionable models that help take advantage of the former while preventing the latter are still lacking.

A follow-up to a previous piece on fine-tuning assessment for AI adaptation, this article was an early attempt to outline a step-by-step process that can help “fine-tune” AI technologies for educational purposes. A major takeaway is that, while these tools are inherently neutral, integrating them into the classroom might be a disservice to our students unless this integration is properly planned and synonymous with the implementation of sound pedagogical practices.

An early version of an app that would help teachers use AI to fine-tune AI integration can be previewed here.