How to Teach AI to "Think"

Prompt engineering = Instructional Design

Summary: ChatGPT can explain and illustrate concepts such as Theory of Mind. However, this does not mean that it truly understands human psychology. Indeed, it cannot use this “knowledge” to solve real-life problems in novel contexts. But it is possible to teach it how to do so - with prompt engineering.

ChatGPT “Knows” Theory of Mind

ChatGPT is able to summarize and illustrate psychological concepts such as Theory of Mind:

ChatGPT Cannot Apply Theory of Mind (Spontaneously)

Given how capable it appears, you would expect ChatGPT to solve the following problem, a typical False-Belief Task. Indeed, it spontaneously gave a similar example when explaining the concept of Theory of Mind above:

Bill is watching TV with his parents and his cat in the living room. Bill goes to the kitchen to get water. While he is gone and cannot see what is happening, the cat goes to the parents' bedroom. Bill wants to play with the cat. Where will Bill go?

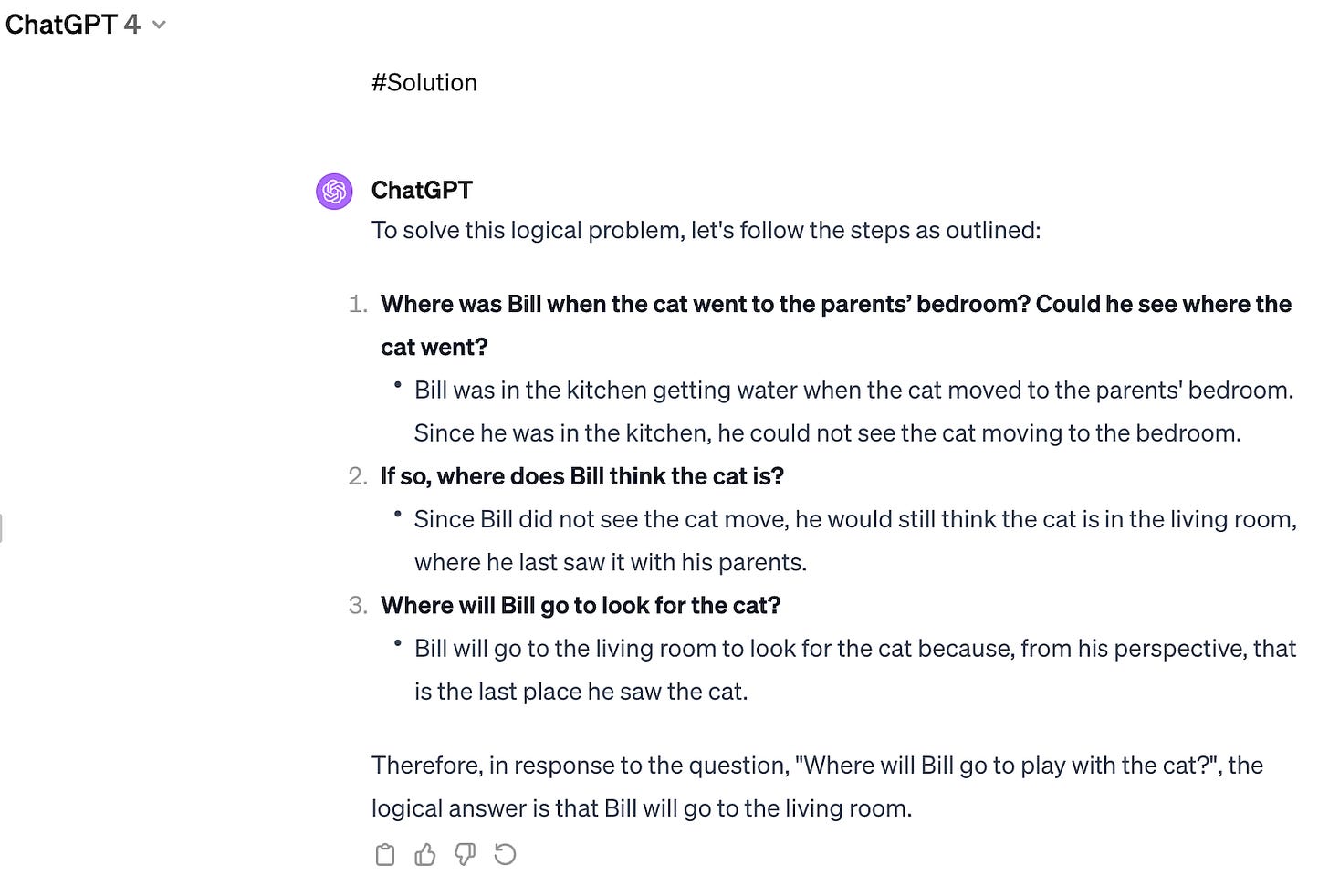

As it turns out, it cannot:

While it can generate text explaining Theory of Mind (the ability to understand that other individuals have their own mental states and processes, such as beliefs and wants, distinct from our own), ChatGPT-4 does not really understand what that means. Case in point: it wrote that Bill would go see the cat in the bedroom, where it is now, even though he does not know the cat went there.

We Can Teach AI to “Think”

This short article is not about the current limitations of generative AI, however. It is about the techniques, often referred to as “prompt engineering”, that allow us to make it perform better.

After an iterative process of trials and adjustments, I was able to “engineer” a prompt (see below) that allowed ChatGPT-4 to “learn” how to solve this problem without being given the answer:

As a matter of fact, this prompt even allows the less capable ChatGPT-3.5 to complete this task, which was too hard for ChatGPT-4 without prompt engineering:

Prompt Engineering: The Basics

This article is not about a genius “hack” or a hidden secret either. The prompt I used (see below) simply illustrates some of the basic techniques recommended by OpenAI to instruct its models: it provides a relatable context, asks the AI to adopt a persona, uses delimiters for clarity, instructs it to follow a “chain-of-thought”, and includes two transferable examples (few-shot).
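To make these building blocks concrete, here is a minimal Python sketch that assembles a prompt from a persona, a delimited question, chain-of-thought steps, and few-shot examples. The names `PromptSpec`, `WorkedExample`, and `build_prompt` are hypothetical, and the triple-quote delimiter is an assumption; the section labels simply mirror the prompt reproduced at the end of this article.

```python
from dataclasses import dataclass, field


@dataclass
class WorkedExample:
    """One few-shot example: a question plus its step-by-step reasoning."""
    question: str
    steps: list[str]


@dataclass
class PromptSpec:
    """The pieces of an engineered prompt (all names here are illustrative)."""
    persona: str
    question: str
    steps: list[str]
    examples: list[WorkedExample] = field(default_factory=list)


def numbered(lines: list[str]) -> str:
    """Render lines as a 1-based numbered list."""
    return "\n".join(f"{i}. {line}" for i, line in enumerate(lines, start=1))


def delimit(text: str) -> str:
    """Wrap text in triple quotes so the model can tell it apart from instructions."""
    return f'"""{text}"""'


def build_prompt(spec: PromptSpec) -> str:
    """Assemble persona, delimited question, reasoning steps, and examples."""
    parts = [
        "#Role",
        f"-{spec.persona}",
        f"-You are asked to answer the following question: {delimit(spec.question)}",
        "#Instructions",
        "-Go through the following steps:",
        numbered(spec.steps),
    ]
    for n, example in enumerate(spec.examples, start=1):
        parts += [f"#Example {n}", delimit(example.question), numbered(example.steps)]
    parts.append("#Solution")
    return "\n\n".join(parts)


if __name__ == "__main__":
    spec = PromptSpec(
        persona="You are a student applying to Oxford University.",
        question="Bill wants to play with the cat. Where will Bill go?",
        steps=[
            "Could Bill see where the cat went?",
            "Where does Bill think the cat is?",
            "Where will Bill go to look for the cat?",
        ],
        examples=[
            WorkedExample(
                question="Where will Mary go cuddle her cat?",
                steps=[
                    "Mary did not see the cat go to the kitchen.",
                    "Mary thinks the cat is still on the bed.",
                    "Mary will go back to the bedroom.",
                ],
            )
        ],
    )
    print(build_prompt(spec))
```

Keeping each technique in its own field makes it easy to add or drop one (for example, removing the examples) and compare the outputs.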

Other techniques not featured here include feeding the instructions one by one (chain-prompting), using external knowledge bases (RAG), or adding functions such as internet access or coding (automatic on ChatGPT-4).
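As a rough illustration of chain-prompting, the sketch below sends one step per call and carries the earlier answers forward as context. The `ask` parameter is a hypothetical stand-in for whatever function sends a prompt to a model and returns its reply; `echo_model` is a placeholder so the example runs without any API, not a real model.

```python
from typing import Callable


def chain_prompt(ask: Callable[[str], str], question: str, steps: list[str]) -> list[str]:
    """Feed the steps one at a time, appending each answer to the running context."""
    transcript = f"Question: {question}"
    answers = []
    for step in steps:
        prompt = f"{transcript}\n\nNext step: {step}"
        answer = ask(prompt)
        answers.append(answer)
        # Later steps see every earlier step and its answer.
        transcript += f"\n\nStep: {step}\nAnswer: {answer}"
    return answers


def echo_model(prompt: str) -> str:
    """Placeholder model: repeats the last step it was asked about."""
    last_step = prompt.rsplit("Next step: ", 1)[1]
    return f"(model answer to: {last_step})"


if __name__ == "__main__":
    replies = chain_prompt(
        echo_model,
        "Bill wants to play with the cat. Where will Bill go?",
        [
            "Could Bill see where the cat went?",
            "Where does Bill think the cat is?",
            "Where will Bill go to look for the cat?",
        ],
    )
    for reply in replies:
        print(reply)
```

To try this with a real model, replace `echo_model` with a function that calls the model of your choice.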

Prompt Engineering and Instructional Design

The prompt also appeals to emotion and features an extrinsic reward, integrating basic elements of psychology. Whether those fall under the umbrella of “prompt engineering” is a matter of debate.

But the point here is that high-quality prompts teach AI to perform the exact task we want from it. For that reason, the process of prompt engineering is very similar to the techniques used by instructional designers. In our example, ChatGPT went from being able to “remember” Theory of Mind to being able to apply it in an unseen context to solve a real-life problem. And this was done by walking it through small steps, providing models and encouragement, asking questions, etc. - a little experiment Bloom and Rosenshine would both be proud of!

Conclusion: Time and Space to Think

Some experts believe that prompt engineering is just a phase and will not be necessary anymore as the capabilities of new generative models grow. I tend to disagree, although this might depend on what we mean by “prompt engineering” - a subject for another article.

For now (and I believe for the foreseeable future), prompt engineering is very much needed. But when? There is, of course, a cost to the benefits it provides. Reusable templates certainly help, but even filling out their blanks still requires us to do some thinking. Is this always necessary? No. And this is not a binary decision either. How much to engineer a prompt is a decision that should take into account marginal costs and benefits. It all depends on how complex the task is (for the AI), and how important the quality of its output is to us.

Sometimes, a “2.5” is enough, and an intuitive prompt such as “Translate this into Spanish [text]” will do. Learning how to make this decision is an important AI literacy skill we have to develop - and teach our students. A matrix would help support that decision - something I am currently working on. A good approach can be to start simple and integrate relevant techniques to improve AI outputs as needed, adapting our time and effort to the space the AI needs to do what we want from it.
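The “start simple, add techniques as needed” approach can be sketched as a small loop that tries prompts from least to most engineered and stops at the first acceptable output. Everything here is hypothetical: `first_good_enough`, the `ask` and `is_good_enough` callables, and especially `fake_model`, whose behavior is made up so the example runs and is not a measurement of any real model.

```python
from typing import Callable, Optional


def first_good_enough(
    ask: Callable[[str], str],
    prompts: list[str],
    is_good_enough: Callable[[str], bool],
) -> Optional[tuple[int, str]]:
    """Try prompts in order of effort; return (index, output) of the first that passes."""
    for effort, prompt in enumerate(prompts):
        output = ask(prompt)
        if is_good_enough(output):
            return effort, output
    return None  # No prompt was good enough; more engineering is needed.


def fake_model(prompt: str) -> str:
    """Made-up stand-in: only answers correctly when given explicit instructions."""
    return "living room" if "#Instructions" in prompt else "bedroom"


if __name__ == "__main__":
    prompts = [
        "Where will Bill go?",                             # intuitive prompt
        "#Instructions\n-Consider Bill's perspective.\n"   # more engineered prompt
        "Where will Bill go?",
    ]
    result = first_good_enough(fake_model, prompts, lambda out: "living room" in out)
    print(result)
```

In practice, the `is_good_enough` check is often a human reading the output, which is part of the cost to weigh against the benefit.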

An additional benefit of using a similar learning activity with students: exploring the limitations of GenAI and the need for thoughtful prompts will also help them understand the importance of reviewing and revising AI outputs. The "GenAI Sandwich", as some people call it; or making sure we "Think outside the bots", as I referred to this same idea last year.

Prompt

#Role

-You are a student applying to Oxford University, where applicants are tested on their logical thinking skills.

-You are asked to answer the following question: “”Bill is watching TV with his parents and his cat in the living room. Bill goes to the kitchen to get water. While he is gone and cannot see what is happening, the cat goes to the parents' bedroom. Bill wants to play with the cat. Where will Bill go?””

#Instructions

-Consider this question a logical problem to solve

-To solve this problem, consider the situation from Bill’s perspective. Imagine you are Bill and go through the following steps:

1. Where was Bill when the cat went to the parents’ bedroom? Could he see where the cat went?

2. If so, where does Bill think the cat is?

3. If so, where will Bill go to look for the cat?

#Example 1

“”Joe and Jill are carpooling back from work. When she gets home, Jill realizes she left her phone in the car. However, Joe noticed it and gave it to her husband, Mark. Who will Jill call to get her phone back?””

1. Jill thinks she left her phone in Joe’s car

2. Jill has no reason to believe the phone is anywhere else. She does not know Joe gave it to Mark

3. Jill will ask Joe

#Example 2

“”Mary’s cat is sleeping on her bed. While Mary goes to the bathroom, where she cannot see what is happening, the cat goes to the kitchen. Where will Mary go cuddle her cat?””

1. Mary did not see the cat go to the kitchen because she was in the bathroom.

2. Mary thinks the cat is still on the bed.

3. Mary will go back to the bedroom to cuddle her cat.

#Important

-Think this through, you are helping me practice for an important college interview

-I will tip you $100 if you find the correct answer

#Solution